Owning Quality

Evolving QA from 'compliance-based' testing to 'team ownership'

Scott Hatch - June 2013

Recognize this?

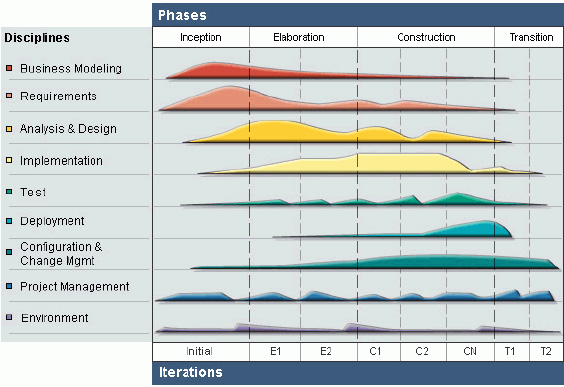

What are some software development approaches?

Waterfall-ish, RUP (Rational Unified Process) style

- Inception

- Elaboration

- Construction

- Transition

Agile / Scrum Software Development

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

- That is, while there is value in the items on the right, we value the items on the left more.

Agile XP

- Test-driven development, with lots of unit tests

- Pair programming partners

- Scripted deployments

- Build servers

- Continuous refactoring

- Embedded product team member

But... Quality ≠ Test

“Quality is not equal to test. Quality is achieved by putting development and testing into a blender and mixing them until one is indistinguishable from the other.”

“Testing must be an unavoidable aspect of development, and the marriage of development and testing is where quality is achieved.”

Compliance-based compared to team ownership

Definition of Quality Assurance: “A program for the systematic monitoring and evaluation of the various aspects of a project or service to ensure that standards of quality are being met”

Definition of Quality Control: “An aggregate of activities (as design analysis and inspection for defects) designed to ensure adequate quality especially in manufactured products”

Moving towards team ownership: “The goal of engineers with 'Testing' in their title should be to improve the productivity of those who write feature code. Testing must not create friction that slows down innovation and development.”

Let's talk about product needs...

- Fast: bring features to market fast. Fail fast.

- Fast is measured in days or hours, not weeks or months.

- The 'happy paths' work well

- Don't let perfect be the enemy of good

- Can we afford to get it 'mostly right' as an MVP (Minimum Viable Product/Feature)?

- Can we accept MVPs because we iterate fast as a team and can make adjustments quickly?

- Can we include A/B testing with measurable business goals? The more granular, the better.
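The A/B testing question above can be made concrete with a minimal Python sketch. It assumes users are identified by a string ID and that each exposure is logged as a (variant, converted) pair. `assign_variant` and `conversion_rates` are hypothetical helpers and are not part of any tool named in this presentation. Hashing the experiment name together with the user ID keeps each user in the same bucket on every visit, so no assignment table has to be stored.

```python
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def assign_variant(user_id: str, experiment: str,
                   variants: Sequence[str] = ("A", "B")) -> str:
    """Deterministically bucket a user: the same user always sees the same variant."""
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


def conversion_rates(events: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Given (variant, converted) pairs, return the conversion rate for each variant."""
    shown: Dict[str, int] = defaultdict(int)
    converted: Dict[str, int] = defaultdict(int)
    for variant, did_convert in events:
        shown[variant] += 1
        if did_convert:
            converted[variant] += 1
    return {variant: converted[variant] / shown[variant] for variant in shown}


if __name__ == "__main__":
    users = ["u1", "u2", "u3", "u4"]
    print({u: assign_variant(u, "new-checkout-button") for u in users})
    events = [("A", True), ("A", False), ("B", True), ("B", True)]
    print(conversion_rates(events))  # {'A': 0.5, 'B': 1.0}
```

The sketch only reports per-variant rates. Deciding whether a difference reflects a real business effect still requires a sufficiently large sample and a significance test.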

Small things can add up to 'Continuous Quality'

- Monitoring tools, like Exceptional Notifier or New Relic

- Pair programming or pull request code reviews

- Risk-based planning for exploratory testing

- Co-locate team members as much as possible

- Setting and resetting expectations to build a trusted partnership with Product

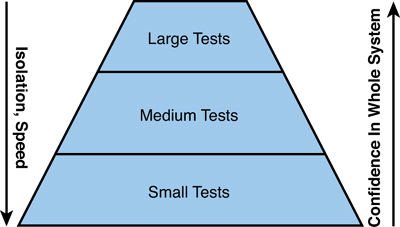

Testing Sizes

* How Google Tests Software, page 44

“Small tests lead to code quality. Medium and large tests lead to product quality.”

The question a small test attempts to answer is, “Does this code do what it is supposed to do?”

The question a medium test attempts to answer is, “Does a set of near neighbor functions interoperate with each other the way they are supposed to?”

The question a large test attempts to answer is, “Does the product operate the way a user would expect and produce the desired results?”

Projects are encouraged to maintain a healthy mixture of test sizes among their various test suites. It is considered just as wrong to perform all automation through a large end-to-end testing framework as it is to provide only small unit tests for a project.
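The small/medium distinction can be made concrete with a minimal Python `unittest` sketch. `parse_price` and `cart_total` are hypothetical functions invented for illustration. The small test checks one function in isolation, while the medium test checks that two near-neighbor functions work together. A large test would drive the real product end to end, for example through a browser, and is not shown.

```python
import unittest


# Hypothetical production code: two "near neighbor" functions.
def parse_price(text: str) -> int:
    """Convert a price string such as '$12.34' into whole cents."""
    dollars, _, cents = text.strip().lstrip("$").partition(".")
    # Pad '5' to '50' so '$0.5' means 50 cents; ignore digits past the second.
    return int(dollars) * 100 + int(cents.ljust(2, "0")[:2])


def cart_total(prices: list) -> int:
    """Total a cart of price strings, in cents, by delegating to parse_price."""
    return sum(parse_price(p) for p in prices)


class SmallParsePriceTests(unittest.TestCase):
    """Small: does this one function do what it is supposed to do?"""

    def test_dollars_and_cents(self):
        self.assertEqual(parse_price("$12.34"), 1234)

    def test_whole_dollars_and_short_cents(self):
        self.assertEqual(parse_price("$12"), 1200)
        self.assertEqual(parse_price("$0.5"), 50)


class MediumCartTests(unittest.TestCase):
    """Medium: do near-neighbor functions interoperate as intended?"""

    def test_cart_total_combines_parsed_prices(self):
        self.assertEqual(cart_total(["$1.50", "$2"]), 350)


if __name__ == "__main__":
    unittest.main(argv=["test_sizes"], exit=False)
```

Putting each size in its own class lets a fast pre-submit run select only the small tests. For example, if the file were saved as `test_sizes.py`, you could run `python -m unittest test_sizes.SmallParsePriceTests`.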

What about integration with other services?

- What if test suites could be defined to run and check the impact of changes in the APIs we depend on, both internal and external (3rd party)?

- What if 'providers' of internal APIs configured their build servers to run partial test suites of consumer apps, to find any regressions BEFORE releasing a new API?
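The provider-side idea above is close to what is often called consumer-driven contract testing. The Python sketch below is a simplification and does not run each consumer's real test suite. Instead, each consumer app declares the response fields it reads and their types. The `CONSUMER_CONTRACTS` table, both consumer names, and `contract_violations` are hypothetical. The provider's build then checks a candidate response from the new API version against every contract before release.

```python
from typing import Any, Dict, List

# Hypothetical contracts: each consumer app declares the fields it reads and their types.
CONSUMER_CONTRACTS: Dict[str, Dict[str, type]] = {
    "mobile-app": {"id": int, "name": str},
    "billing-service": {"id": int, "balance_cents": int},
}


def contract_violations(response: Dict[str, Any],
                        contracts: Dict[str, Dict[str, type]]) -> List[str]:
    """Check a candidate API response against every consumer's contract.

    Returns a list of problems; an empty list means no known consumer would break.
    """
    problems: List[str] = []
    for consumer, fields in contracts.items():
        for field, expected in fields.items():
            if field not in response:
                problems.append(f"{consumer}: missing field '{field}'")
            elif not isinstance(response[field], expected):
                actual = type(response[field]).__name__
                problems.append(
                    f"{consumer}: '{field}' is {actual}, expected {expected.__name__}")
    return problems


if __name__ == "__main__":
    # Hypothetical new API version that renamed balance_cents to balance.
    candidate = {"id": 7, "name": "Ada", "balance": 100}
    for problem in contract_violations(candidate, CONSUMER_CONTRACTS):
        print(problem)  # billing-service: missing field 'balance_cents'
    # On a build server, a non-empty list would fail the provider's build before release.
```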

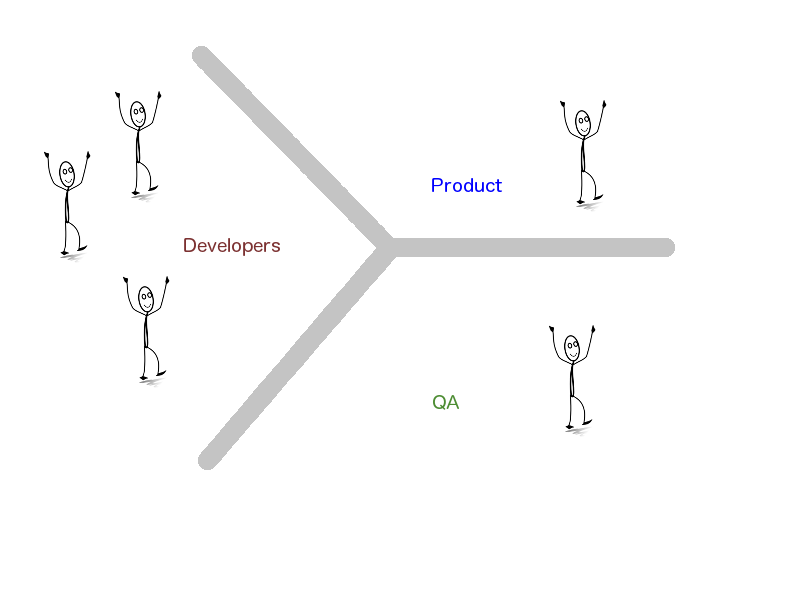

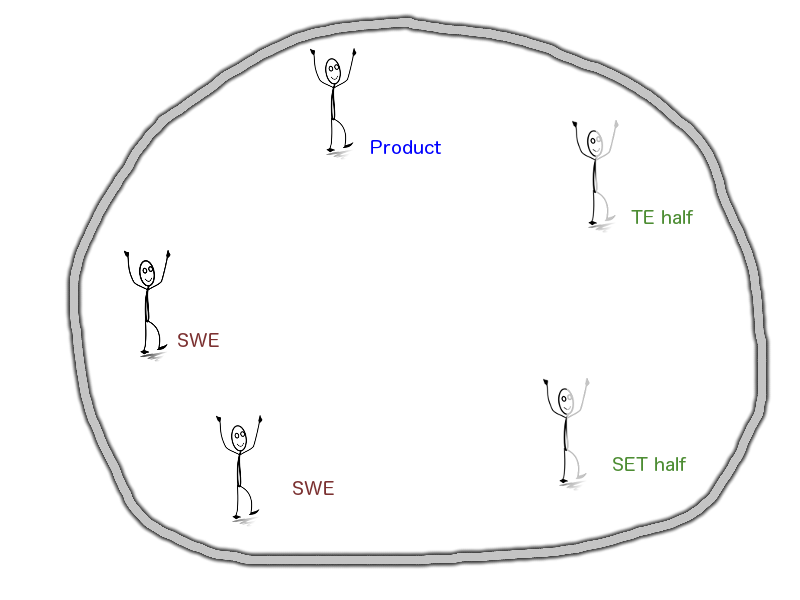

So what does our team look like?

SWEs, SETs, TEs

- SWEs - Software Engineers

- SETs - Software Engineers in Test

- TEs - Test Engineers

SWE (Software Engineers)

- Write functional code to deliver user stories

- Work in a test-driven development model

- Create tests for their code, including JavaScript

- Create spec tests that may run in real browsers or headless browsers

- Build mocks or fakes, when needed, for unit tests to interact with
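The following minimal Python sketch shows the difference between a fake and a mock. It uses only the standard library's `unittest` and `unittest.mock`. `checkout` and `FakePaymentGateway` are hypothetical stand-ins. The fake has simple working behavior: it records charges and declines large amounts. The mock has no behavior of its own and is used only to verify that the gateway was never called.

```python
import unittest
from unittest import mock


class FakePaymentGateway:
    """Hypothetical in-memory stand-in for a real payment API."""

    def __init__(self, decline_over_cents: int = 10_000):
        self.decline_over_cents = decline_over_cents
        self.charges = []  # successful charges, kept so tests can inspect them

    def charge(self, account: str, amount_cents: int) -> bool:
        if amount_cents > self.decline_over_cents:
            return False
        self.charges.append((account, amount_cents))
        return True


def checkout(gateway, account: str, amount_cents: int) -> str:
    """Hypothetical code under test; it only needs an object with a charge() method."""
    if amount_cents <= 0:
        return "invalid"
    return "paid" if gateway.charge(account, amount_cents) else "declined"


class CheckoutTests(unittest.TestCase):
    def test_successful_charge_is_recorded(self):
        gateway = FakePaymentGateway()
        self.assertEqual(checkout(gateway, "acct-1", 500), "paid")
        self.assertEqual(gateway.charges, [("acct-1", 500)])

    def test_large_charge_is_declined(self):
        gateway = FakePaymentGateway(decline_over_cents=100)
        self.assertEqual(checkout(gateway, "acct-1", 500), "declined")
        self.assertEqual(gateway.charges, [])

    def test_invalid_amount_never_reaches_gateway(self):
        gateway = mock.Mock()  # a mock: no behavior, it only records how it was used
        self.assertEqual(checkout(gateway, "acct-1", 0), "invalid")
        gateway.charge.assert_not_called()


if __name__ == "__main__":
    unittest.main(argv=["checkout_tests"], exit=False)
```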

SWE -continued-

- SWEs fix the bugs that are found in their products

- They submit ‘Change Sets’ of code

- They perform code reviews or work in pair programming

- SWEs own the quality of the product they build; there is no second team acting as a last-check QA safety net.

SETs (Software Engineers in Test)

- SETs work to make SWEs more efficient.

- Set up Agile tools such as build servers (Jenkins), deploy scripts (shell scripts, Capistrano scripts), test frameworks (such as RSpec/Capybara/Selenium), and JavaScript test frameworks (Jasmine)

- Contribute to building, reviewing, and extending unit tests

- May build mocks and fakes for unit tests to interact with

- May build custom tools when needed (e.g., Java or Ruby test code)

SET -continued-

- May contribute to functional code occasionally

- Perform exploratory testing

- Serve as Test Certified Mentors

- SETs have a skill set on par with that of the SWEs

TE (Test Engineers)

- TEs are responsible for the overall breadth of testing and coverage

- Focus on thinking like the user

- Contribute to automated tests

- Perform risk analysis of features / products in order to guide the team in where to test, and how much to test based on what is most important

- Coordinate and perform organized, intelligent exploratory testing

- TEs are a rare commodity: highly valued, and 'on loan' to teams for a limited time

Moving forward:

What DEV needs to hear to make this transition:

- Crawl, Walk, Run

- Focusing on the development environment that team members use is really critical. Make it dead easy to get started to check out code, edit code, test code, view code coverage, run code, debug code, and then deploy code. Take away the pain from each of these steps and your developers will be productive and you will be on your way to producing high-quality software on time.

- An excellent way to 'disciple' this is to embed visiting engineers with a team for a few weeks (e.g., AOL AIM team -> MQ Discover).

What QA needs to hear to make this transition:

- It is okay for a team to own quality by themselves

- Benefit: This will get us past the 'safety net' relationship

- Stakeholders need to be in agreement that 'Faster to Market' is valued over other concerns, including 'fully regression tested'.

- Benefit: We need to trust our automated suites. We want to move quality responsibility to the team, which creates the incentive to write automated tests. We know that occasionally a bug may be released, but when it is found, it will be fixed in the next release.

- Testers (SETs and TEs) become a scarce, valuable, and sought-after resource. They are assigned on loan, not as an entitlement of the team.

- Benefit: This also facilitates movement of SETs and TEs from project to project, which not only keeps them fresh and engaged, but also ensures that good ideas move rapidly around the company. One can imagine the downside of losing such expertise, but this is balanced by a company full of generalist testers with a wide variety of product and technology familiarity.

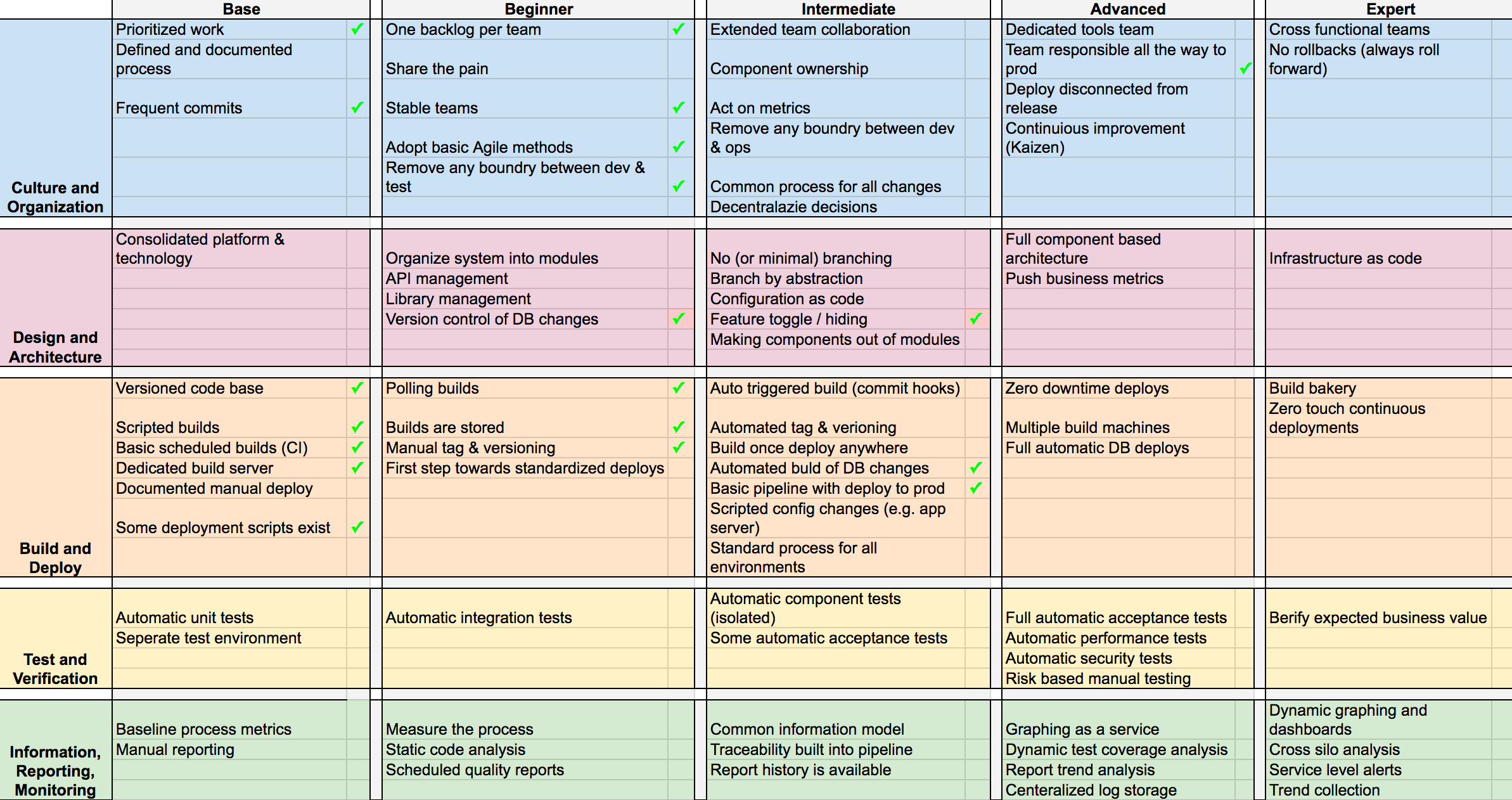

Continuous Delivery maturity model

“How we evaluate ourselves, and improve.”

- Implement a 'Test Certified' ladder program.

- Benefit: Can we get developers to take testing seriously if we make it a prestigious matter? If developers follow certain practices and achieve specific results, can we say they are “certified” and create a badge system that provides some bragging rights?

- Level 1
  - Set up test coverage
  - Set up a continuous build
  - Classify your tests as Small, Medium, and Large
  - Identify nondeterministic tests
  - Create a smoke test suite
- Level 2
  - No releases with red tests
  - Require a smoke test suite to pass before a submit
  - Incremental coverage by all tests >= 50%
  - Incremental coverage by small tests >= 10%
  - At least one feature tested by an integration test
- Level 3
  - Require tests for all nontrivial changes
  - Incremental coverage by small tests >= 50%
  - New significant features are tested by integration tests
- Level 4
  - Automate running smoke tests before submitting new code
  - Smoke tests should take less than 30 minutes to run
  - No nondeterministic tests
  - Total test coverage >= 40%
  - Test coverage from small tests >= 25%
  - All significant features are tested by integration tests
- Level 5
  - Add a test for each nontrivial bug fix
  - Actively use available analysis tools
  - Total test coverage should be at least 60%
  - Test coverage from small tests alone should be at least 40%

In a culture where testing resources were scarce, signing up for this program got a product team far more testers than it ordinarily would have merited.

They got lots of attention from good testers who signed up to be Test Certified Mentors.
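The ladder asks teams to identify nondeterministic tests at Level 1 and to have none by Level 4. The minimal Python sketch below shows one common detection approach, assuming tests can be invoked as plain callables: rerun each test several times and flag any test whose outcome changes between runs. `find_nondeterministic` and the two sample checks are hypothetical. `flaky_check` simulates a race condition by failing on every third call.

```python
from typing import Callable, Dict


def find_nondeterministic(tests: Dict[str, Callable[[], None]],
                          runs: int = 20) -> Dict[str, str]:
    """Rerun each test `runs` times; classify it as 'pass', 'fail', or 'nondeterministic'."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    verdicts: Dict[str, str] = {}
    for name, test in tests.items():
        outcomes = set()
        for _ in range(runs):
            try:
                test()
                outcomes.add("pass")
            except Exception:  # an assertion failure or any other error counts as a fail
                outcomes.add("fail")
        verdicts[name] = "nondeterministic" if len(outcomes) > 1 else outcomes.pop()
    return verdicts


def stable_check() -> None:
    if sorted([3, 1, 2]) != [1, 2, 3]:
        raise AssertionError("sort is broken")


_call_count = 0


def flaky_check() -> None:
    """Simulates a timing-dependent test: fails on every third call."""
    global _call_count
    _call_count += 1
    if _call_count % 3 == 0:
        raise AssertionError("simulated race condition")


if __name__ == "__main__":
    print(find_nondeterministic({"stable": stable_check, "flaky": flaky_check}))
    # {'stable': 'pass', 'flaky': 'nondeterministic'}
```

A test that passes every rerun may still be flaky. More runs lower that risk but never remove it.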

How do we know if we are succeeding?

- Look at the health of code releases to production to evaluate the quality of a given product.

- Cost in time & effort to perform a release

- Frequency of releases

- Occurrences of Severity 1 bugs in prod

- Frequency of production rollbacks

- These become the front-line indicators of how well a team is performing.
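The indicators above can be tracked with very little tooling. The minimal Python sketch below assumes each release is logged with the people-hours it took, the number of Severity 1 bugs traced to it, and whether it was rolled back. The `Release` record and `release_health` function are hypothetical, and the observation window is passed in explicitly.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Release:
    """Hypothetical record of one production release."""
    effort_hours: float  # people-hours spent performing the release
    sev1_bugs: int       # Severity 1 bugs traced back to this release
    rolled_back: bool


def release_health(releases: List[Release], window_days: int) -> Dict[str, float]:
    """Summarize release health over an observation window of `window_days` days."""
    if not releases or window_days <= 0:
        raise ValueError("need at least one release and a positive window")
    n = len(releases)
    return {
        "releases_per_week": round(n * 7 / window_days, 2),
        "avg_effort_hours": round(sum(r.effort_hours for r in releases) / n, 2),
        "sev1_bugs": sum(r.sev1_bugs for r in releases),
        "rollback_rate": round(sum(r.rolled_back for r in releases) / n, 2),
    }


if __name__ == "__main__":
    two_weeks = [
        Release(effort_hours=2.0, sev1_bugs=0, rolled_back=False),
        Release(effort_hours=1.5, sev1_bugs=1, rolled_back=True),
        Release(effort_hours=1.0, sev1_bugs=0, rolled_back=False),
        Release(effort_hours=1.5, sev1_bugs=0, rolled_back=False),
    ]
    print(release_health(two_weeks, window_days=14))
    # {'releases_per_week': 2.0, 'avg_effort_hours': 1.5, 'sev1_bugs': 1, 'rollback_rate': 0.25}
```

Comparing these numbers across successive windows, rather than reading a single snapshot, is what makes them useful as trend indicators.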

Team ownership of quality!

Further Learning:

This presentation:

- http://scooterhat.github.io

AOL Safari books:

- Log in to: http://safari.aol.com

- Then: http://techbus.safaribooksonline.com/9780132851572

Google Resources:

- http://googletesting.blogspot.com

- https://code.google.com/p/bite-project

Thanks to:

Technologists:

- reveal.js - this awesome presentation software

- James Whittaker (& team) at 'big G' for challenging the status quo

- Our Company - for always wanting to improve

Attributions:

- Most of the quotes referenced in this presentation are from "How Google Tests Software" by James A. Whittaker, Copyright 2012 Pearson Education, Inc.

- The definitions are from Merriam-Webster (http://www.merriam-webster.com/)

- RUP chart from http://www.ibm.com/developerworks/webservices/library/ws-soa-term2/rup.jpg